Ghost CMS on AWS EC2 with Terraform : An Automated Approach

July 21, 2024

Tamilarasu Gurusamy

Automating the previous manual procedures of creating the lambda and eventbridge rules using terraform

Overview

In this post, we will use Terraform to automate the deployment tasks that we performed manually in this blog.

Deploying the infrastructure that automates the startup and DNS updates of the Ghost CMS instance with Terraform reduces the errors and troubleshooting that a manual deployment is likely to involve.

It also makes it easy to create the resources, or to remove them when they are no longer needed.

Read more about Terraform here

Setting up Terraform Environment

- Create a new project directory to store all the Terraform-related files

- Create a new provider.tf file

- The required_providers section will be declared here

provider.tf

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "5.58.0"
    }
  }
}

Defining AWS Provider Configuration

- We will provide the AWS credentials using the credentials file

- Generate the access key ID and secret access key from IAM

- Store the retrieved credentials in the following format in the file ~/.aws/credentials

~/.aws/credentials

[default]

aws_access_key_id=your-access-key

aws_secret_access_key=your-secret-access-key

region=closest-region
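The credentials file uses an INI layout with one section per profile. As a minimal sketch (not part of the original setup), Python's standard configparser module can parse that layout, which is a quick way to confirm that the file contains the expected keys before running Terraform. The sample text and the helper name read_profile are illustrative assumptions.

```python
import configparser

# Sample content in the same layout as ~/.aws/credentials (placeholder values).
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id=your-access-key
aws_secret_access_key=your-secret-access-key
region=closest-region
"""


def read_profile(text, profile="default"):
    """Return the key/value pairs of one profile as a plain dict."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return dict(parser[profile])


profile = read_profile(SAMPLE_CREDENTIALS)
print(sorted(profile))
```

To check a real file, the same helper could be given the text of ~/.aws/credentials instead of the sample string.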

- Define the provider block with the region in the main.tf file

- You can modify the region using the variables in the terraform.tfvars file

- terraform.tfvars is a file which contains the values of the variables needed by the Terraform configuration

main.tf

provider "aws" {
  region = var.aws-required-region
}

Creating IAM Roles

- We need to create 3 IAM Roles for the 3 Lambda functions that will be running

Role 1: create-a-record-role

- The first role is create-a-record-role, used by the Lambda function that creates an A record for ghost.domain.com whenever the instance starts with a new public IP

- We will use the resource aws_iam_role to create the required role

- The required attributes are name and assume_role_policy, which allows the Lambda service to assume the role

- The important attribute is inline_policy, which grants the role permission to read the EC2 instance information; the Lambda function uses this role

iam.tf

resource "aws_iam_role" "a-record-role" {
  name = "create-a-record-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow",
        Principal = {
          Service = "lambda.amazonaws.com"
        },
        Action = "sts:AssumeRole"
      }
    ]
  })

  inline_policy {
    name   = "create-a-record-policy"
    policy = file(var.a-record-policy-path)
  }
}

- The policy in the inline_policy block refers to a variable a-record-policy-path, which has the default value of ./policies/create-a-record.json

- If a different value needs to be assigned, it can be provided in the terraform.tfvars file

- Since we need to keep the Terraform files as readable as possible, most of the policies and Python files are kept separate
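To make the two policy documents concrete, the sketch below builds them as Python dictionaries. The trust policy mirrors the assume_role_policy from iam.tf. The permissions policy is only an assumption about what ./policies/create-a-record.json could contain: the post says it grants access to EC2 instance information, and ec2:DescribeInstances is the action behind the describe_instances call the function makes. The real file in the repository may differ.

```python
import json

# Trust policy: same content as the jsonencode(...) block in iam.tf.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Hypothetical content for ./policies/create-a-record.json (an assumption).
a_record_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "ec2:DescribeInstances",
            "Resource": "*",
        }
    ],
}

print(json.dumps(a_record_policy, indent=2))
```

Keeping the permissions in a separate JSON file, as the post does, means the role block stays short and the policy can be reviewed on its own.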

Role 2: create-volume-start-instance-role

- This role is used by a Lambda function to create a volume from the most recent snapshot and attach it to the instance id specified in the terraform.tfvars file

- This block also uses the resource aws_iam_role, with the policy read from the variable volume-start-instance-policy-path, which contains the path of the policy file

iam.tf

resource "aws_iam_role" "create-volume-instance-role" {
  name = "create-volume-start-instance-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow",
        Principal = {
          Service = "lambda.amazonaws.com"
        },
        Action = "sts:AssumeRole"
      }
    ]
  })

  inline_policy {
    name   = "create-volume-start-instance-policy"
    policy = file(var.volume-start-instance-policy-path)
  }
}

Role 3: snap-and-delete-volume-role

- This role will be used by a lambda function to create a snapshot and delete the volume currently attached to the instance

- It also uses the resource aws_iam_role, with the policy path specified in the variable snap-and-delete-volume-policy-path

iam.tf

resource "aws_iam_role" "snap-and-delete-volume-role" {
  name = "snap-and-delete-volume-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Effect = "Allow",
        Principal = {
          Service = "lambda.amazonaws.com"
        },
        Action = "sts:AssumeRole"
      }
    ]
  })

  inline_policy {
    name   = "snap-and-delete-volume-policy"
    policy = file(var.snap-and-delete-volume-policy-path)
  }
}

Creating Lambda functions

- We need to create 3 Lambda functions which will be performing 3 different tasks

1. create-a-record

- This Lambda function creates the A record with the new IP when the instance starts

- First we need to create a template file which has values to be replaced like

- aws_required_region

- domain

- instance-id

- By convention, the template file name ends in .tpl; the templatefile function itself accepts any file path

create-a-record.py.tpl

import requests
import boto3
import os

ec2_client = boto3.client('ec2', region_name='${aws_required_region}')
access_token = os.environ.get('NETLIFY_ACCESS_TOKEN')
domain = "${domain}"
instance_id = '${instance-id}'
record_type = "A"
record_name = "ghost.${domain}"


def get_instance_public_ip(instance_id):
    # Describe instance
    response = ec2_client.describe_instances(
        InstanceIds=[instance_id]
    )
    # Extract public IP address
    public_ip = None
    for reservation in response['Reservations']:
        for instance in reservation['Instances']:
            public_ip = instance.get('PublicIpAddress')
    return public_ip


def get_netlify_dns_zone_id(access_token, domain):
    # Define the Netlify API endpoint for DNS zones
    api_endpoint = "https://api.netlify.com/api/v1/dns_zones"
    # Set up headers with authorization
    headers = {
        "Authorization": f"Bearer {access_token}"
    }
    # Send a GET request to retrieve DNS zones
    response = requests.get(api_endpoint, headers=headers)
    # Check if the request was successful
    if response.status_code == 200:
        dns_zones = response.json()
        # Search for the domain and return its DNS zone ID
        for zone in dns_zones:
            if zone['name'] == domain:
                return zone['id']
        print(f"Domain '{domain}' not found in Netlify DNS zones.")
        # No matching zone: return None instead of the last zone's ID
        return None
    else:
        print(f"Failed to fetch DNS zones. Status code: {response.status_code}")
        return None


def get_record_id(access_token, zone_id, record_type, record_name):
    # Define the Netlify API endpoint for retrieving DNS records
    api_endpoint = f"https://api.netlify.com/api/v1/dns_zones/{zone_id}/dns_records"
    # Set up headers with authorization
    headers = {
        "Authorization": f"Bearer {access_token}"
    }
    # Send a GET request to retrieve DNS records
    response = requests.get(api_endpoint, headers=headers)
    # Check if the request was successful
    if response.status_code == 200:
        dns_records = response.json()
        # Search for the desired record and return its ID
        for record in dns_records:
            if record['type'] == record_type and record['hostname'] == record_name:
                return record['id']
        print(f"Record of type '{record_type}' and name '{record_name}' not found.")
        return None
    else:
        print(f"Failed to fetch DNS records. Status code: {response.status_code}")
        return None


def delete_dns_record(access_token, zone_id, dns_record_id):
    # Define the Netlify API endpoint for deleting a DNS record
    api_endpoint = f"https://api.netlify.com/api/v1/dns_zones/{zone_id}/dns_records/{dns_record_id}"
    # Set up headers with authorization
    headers = {
        "Authorization": f"Bearer {access_token}"
    }
    # Send a DELETE request to delete the DNS record
    response = requests.delete(api_endpoint, headers=headers)
    # Check if the request was successful
    if response.status_code == 204:
        print("DNS record deleted successfully")
    else:
        print(f"Failed to delete DNS record. Status code: {response.status_code}, Error: {response.text}")


def create_a_record(access_token, record_name, zone_id, public_ip):
    # Define the Netlify API endpoint for adding DNS records
    api_endpoint = f"https://api.netlify.com/api/v1/dns_zones/{zone_id}/dns_records"
    # Set up headers with authorization
    headers = {
        "Authorization": f"Bearer {access_token}"
    }
    # Define the payload for creating the A record
    payload = {
        "type": "A",
        "hostname": record_name,
        "value": public_ip
    }
    # Send a POST request to create the A record
    response = requests.post(api_endpoint, headers=headers, json=payload)
    # Check if the request was successful
    if response.status_code == 201:
        print("A record created successfully")
    else:
        print(f"Failed to create A record. Status code: {response.status_code}, Error: {response.text}")


def lambda_handler(event, context):
    public_ip = get_instance_public_ip(instance_id)
    print(f"The public ip of instance-id {instance_id} is {public_ip}")
    zone_id = get_netlify_dns_zone_id(access_token, domain)
    print(f"DNS Zone ID for domain '{domain}': {zone_id}")
    # Without a zone ID there is nothing to update
    if zone_id is None:
        return
    dns_record_id = get_record_id(access_token, zone_id, record_type, record_name)
    print(f"The DNS Record ID of the Record {record_name} is {dns_record_id}")
    # Only delete the old record if one exists
    if dns_record_id is not None:
        delete_dns_record(access_token, zone_id, dns_record_id)
    create_a_record(access_token, record_name, zone_id, public_ip)
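The template mixes two kinds of braces: the ${...} placeholders are filled in by Terraform, while the plain {...} fields belong to Python f-strings and must survive rendering untouched. The sketch below imitates only that simple substitution with a regular expression; it is not how Terraform implements templatefile, which also supports expressions and %{...} directives. The render helper and the sample values are hypothetical.

```python
import re

# Matches ${name} placeholders; plain {name} (Python f-string fields) is ignored.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_-]*)\}")


def render(template, values):
    """Replace each ${name} with values[name]; raise KeyError if one is missing."""
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


template = (
    "domain = \"${domain}\"\n"
    "instance_id = '${instance-id}'\n"
    "headers = {\"Authorization\": f\"Bearer {access_token}\"}\n"
)
rendered = render(
    template,
    {"domain": "example.com", "instance-id": "i-0123456789abcdef0"},
)
print(rendered)
```

The f-string field {access_token} is left as it is, which is why the Lambda source can be used as a template without escaping.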

- Then we need to use the resource local_file to replace the placeholders with the values from the terraform.tfvars file and write the result to a file named create-a-record.py inside the python folder

- The next step is to archive the file using the archive_file data source and place the archive inside the python folder

- Since the requests library is not available by default in the Lambda Python runtime, we have to provide it as a layer, so we use the resource aws_lambda_layer_version to upload the layer zip file, which contains the files required to import the requests module in the Lambda function

- The final step is to create the Lambda function using the resource aws_lambda_function

- It requires

- filename: the archive file that we created

- runtime: the language in which the Lambda function is written

- function_name: the name of the Lambda function

- role: the role that we created earlier, which grants the permissions

- handler: the entry point where the Lambda function starts running

- layers: the layers to use

- timeout: we need to increase the timeout to 10 seconds, up from the default of 3 seconds

- environment: since this function needs permission to change the A record of the domain, which is managed by Netlify, the access token generated from Netlify is required

lambda.tf

resource "local_file" "create-a-record-lambda" {
  content = templatefile(var.create-a-record-lambda-file, {
    aws_required_region = var.aws-required-region,
    domain              = var.domain,
    instance-id         = var.instance-id
  })
  filename = "${path.module}/python/create-a-record.py"
}

data "archive_file" "create-a-record-zip" {
  type        = "zip"
  output_path = "${path.module}/python/create-a-record.zip"
  source_file = local_file.create-a-record-lambda.filename
}

resource "aws_lambda_layer_version" "requests_layer" {
  filename            = "./python/requests_layer.zip"
  layer_name          = "requests_layer"
  compatible_runtimes = ["python3.10"]
}

resource "aws_lambda_function" "create-a-record-lambda" {
  # Referencing the archive makes Terraform build the zip before creating the function
  filename      = data.archive_file.create-a-record-zip.output_path
  runtime       = "python3.10"
  function_name = "create-a-record"
  role          = aws_iam_role.a-record-role.arn
  handler       = "create-a-record.lambda_handler"
  layers        = [aws_lambda_layer_version.requests_layer.arn]
  timeout       = 10

  environment {
    variables = {
      NETLIFY_ACCESS_TOKEN = var.netlify_access_token
    }
  }
}
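Two details of this block are easy to get wrong: the zip must contain the Python file at its root, and the handler value is the file name without .py followed by the function name. The sketch below reproduces the packaging step with Python's zipfile module, purely for illustration; it is not what the archive_file data source runs internally, and the helper names are made up.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_single_file(source, archive):
    """Write one file into a zip archive, stored at the archive root."""
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as bundle:
        bundle.write(source, arcname=Path(source).name)
    return archive


def handler_string(source, function_name="lambda_handler"):
    """Build the '<file name without .py>.<function>' handler value."""
    return f"{Path(source).stem}.{function_name}"


with tempfile.TemporaryDirectory() as workdir:
    source = Path(workdir) / "create-a-record.py"
    source.write_text("def lambda_handler(event, context):\n    return 'ok'\n")
    archive = zip_single_file(source, Path(workdir) / "create-a-record.zip")
    with zipfile.ZipFile(archive) as bundle:
        names = bundle.namelist()

handler = handler_string("create-a-record.py")
print(names, handler)
```

If the handler value and the file name inside the zip drift apart, the function deploys but fails at invocation time because the module cannot be imported.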

2. snap-and-delete-lambda

- This Lambda function will create a snapshot and delete the volume associated with the instance id specified in the terraform.tfvars file

- We follow the same procedure as for the previous Lambda function

- Create a file snap-and-delete-volume.py using the templatefile function in Terraform

- Create an archive file using the same name

- Create a resource aws_lambda_function with the same parameters as above, except layers

lambda.tf

resource "local_file" "snap-and-delete-file" {
  content = templatefile(var.snap-and-delete-volume-lambda-file, {
    aws-required-region = var.aws-required-region,
    domain              = var.domain,
    instance-id         = var.instance-id
  })
  filename = "${path.module}/python/snap-and-delete-volume.py"
}

data "archive_file" "snap-and-delete-zip" {
  type        = "zip"
  output_path = "${path.module}/python/snap-and-delete-volume.zip"
  source_file = local_file.snap-and-delete-file.filename
}

resource "aws_lambda_function" "snap-and-delete-lambda" {
  # Referencing the archive makes Terraform build the zip before creating the function
  filename      = data.archive_file.snap-and-delete-zip.output_path
  runtime       = "python3.10"
  function_name = "snap-and-delete-volume"
  role          = aws_iam_role.snap-and-delete-volume-role.arn
  handler       = "snap-and-delete-volume.lambda_handler"
  timeout       = 10
}

3. create-volume-start-instance-lambda

- This lambda function will create a volume from the latest of the snapshots created using the above lambda function

- This Lambda function requires an additional variable, root-device-name, which is /dev/sda1 by default

- We can change this variable in the terraform.tfvars file

lambda.tf

resource "local_file" "create-volume-instance" {
  content = templatefile(var.create-volume-instance-lambda-file, {
    aws-required-region = var.aws-required-region,
    domain              = var.domain,
    instance-id         = var.instance-id,
    root-device-name    = var.root-device-name
  })
  filename = "${path.module}/python/create-volume-instance.py"
}

data "archive_file" "lambda_zip" {
  type        = "zip"
  output_path = "${path.module}/python/create-volume-instance.zip"
  source_file = local_file.create-volume-instance.filename
}

resource "aws_lambda_function" "create-volume-instance-lambda" {
  # Referencing the archive makes Terraform build the zip before creating the function
  filename      = data.archive_file.lambda_zip.output_path
  runtime       = "python3.10"
  function_name = "create-volume-start-instance"
  role          = aws_iam_role.create-volume-instance-role.arn
  handler       = "create-volume-instance.lambda_handler"
  timeout       = 10
}
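The Python source of this function is not listed in the post. As a hedged sketch of one step it needs, picking the most recent snapshot, the helper below works on entries shaped like the Snapshots list returned by the EC2 describe_snapshots call. The sample data and the function name are made up, and the function in the repository may do this differently.

```python
from datetime import datetime, timezone


def latest_completed_snapshot(snapshots):
    """Return the newest snapshot whose State is 'completed', or None."""
    completed = [snap for snap in snapshots if snap.get("State") == "completed"]
    if not completed:
        return None
    return max(completed, key=lambda snap: snap["StartTime"])


# Made-up entries using the field names of a describe_snapshots response.
sample = [
    {"SnapshotId": "snap-aaa", "State": "completed",
     "StartTime": datetime(2024, 7, 1, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-bbb", "State": "completed",
     "StartTime": datetime(2024, 7, 20, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-ccc", "State": "pending",
     "StartTime": datetime(2024, 7, 21, tzinfo=timezone.utc)},
]
newest = latest_completed_snapshot(sample)
print(newest["SnapshotId"])
```

Filtering on the completed state is a design choice of this sketch: a volume should not be created from a snapshot that is still pending.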

Creating Eventbridge Rules

1. ec2-ghost-stop

- This rule is triggered when the instance is stopped, and it in turn triggers the snap-and-delete-volume Lambda function

- EventBridge is still referred to as CloudWatch Events in the Terraform resource names, so don't be confused by that

- First we need to create the aws_cloudwatch_event_rule, which defines the event pattern, i.e. when the rule will be triggered

- Before that, we need a template file which contains the event pattern in JSON

ec2-ghost-stop.json.tpl

{
  "source": ["aws.ec2"],
  "detail-type": ["EC2 Instance State-change Notification"],
  "detail": {
    "state": ["stopped"],
    "instance-id": ["${instance-id}"]
  }
}
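To see what this pattern selects, the sketch below implements a deliberately simplified matcher: every list in the pattern holds the allowed values for that field, and nested objects are matched recursively. Real EventBridge patterns support more operators than this, and the event shown is trimmed to the fields the pattern looks at. The instance id is a placeholder.

```python
def matches(pattern, event):
    """Simplified matcher: lists hold allowed values, dicts are matched recursively."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


pattern = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped"], "instance-id": ["i-0123456789abcdef0"]},
}


def state_change_event(state):
    """Build a trimmed-down EC2 state-change event for the sample instance."""
    return {
        "source": "aws.ec2",
        "detail-type": "EC2 Instance State-change Notification",
        "detail": {"instance-id": "i-0123456789abcdef0", "state": state},
    }


print(matches(pattern, state_change_event("stopped")))
print(matches(pattern, state_change_event("running")))
```

Only the stopped event for the configured instance matches, so other instances and other state changes do not trigger the rule.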

- Then the placeholders are replaced using the templatefile function in Terraform with the values obtained from terraform.tfvars

- Then, using the resource aws_cloudwatch_event_target, link the rule created above to the Lambda function that needs to be triggered when the event happens

eventbridge.tf

resource "aws_cloudwatch_event_rule" "ec2-ghost-stop" {
  name        = "ec2-ghost-stop"
  description = "Trigger the snap-and-delete-volume lambda when the instance stops"
  event_pattern = templatefile(var.ec2-ghost-stop-rule-path, {
    instance-id = var.instance-id
  })
}

resource "aws_cloudwatch_event_target" "ec2-ghost-stop-target" {
  rule = aws_cloudwatch_event_rule.ec2-ghost-stop.name
  arn  = aws_lambda_function.snap-and-delete-lambda.arn
}

2. ec2-ghost-start

- This rule is triggered when the instance starts and reaches the running state

- It in turn triggers the create-a-record Lambda function to update the A record of the domain with the new IP

eventbridge.tf

resource "aws_cloudwatch_event_rule" "ec2-ghost-start" {
  name        = "ec2-ghost-start"
  description = "Trigger the create-a-record lambda when the instance starts"
  event_pattern = templatefile(var.ec2-ghost-start-rule-path, {
    instance-id = var.instance-id
  })
}

resource "aws_cloudwatch_event_target" "ec2-ghost-start-target" {
  rule = aws_cloudwatch_event_rule.ec2-ghost-start.name
  arn  = aws_lambda_function.create-a-record-lambda.arn
}
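The ec2-ghost-start.json.tpl template itself is not listed in this post. Presumably it mirrors the stop template with the state set to running; the sketch below generates such a pattern so the two can be compared. Treat the output as an assumption about the file, not a copy of it.

```python
import json


def state_change_pattern(state, instance_placeholder="${instance-id}"):
    """Build an EC2 state-change event pattern for the given instance state."""
    return {
        "source": ["aws.ec2"],
        "detail-type": ["EC2 Instance State-change Notification"],
        "detail": {
            "state": [state],
            "instance-id": [instance_placeholder],
        },
    }


start_pattern = state_change_pattern("running")
print(json.dumps(start_pattern, indent=2))
```

The ${instance-id} placeholder is kept in the output so that the templatefile call in the rule can still fill it in.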

3. Granting the Eventbridge rules permission to invoke lambda

- To grant the EventBridge rules permission to invoke the Lambda functions, we use the resource aws_lambda_permission in two blocks

- The first block allows the ec2-ghost-stop rule to invoke the snap-and-delete-volume Lambda function

eventbridge.tf

resource "aws_lambda_permission" "allow_cloudwatch-ec2-ghost-stop" {
  statement_id  = "AllowExecutionFromCloudWatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.snap-and-delete-lambda.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.ec2-ghost-stop.arn
}

- The next block allows the ec2-ghost-start rule to invoke the create-a-record Lambda function

eventbridge.tf

resource "aws_lambda_permission" "allow_cloudwatch-ec2-ghost-start" {
  statement_id  = "AllowExecutionFromCloudWatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.create-a-record-lambda.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.ec2-ghost-start.arn
}
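Each aws_lambda_permission block adds one statement to the resource-based policy of its function. The sketch below shows roughly what such a statement looks like, to make clear that the permission is scoped to a single rule through the source ARN. The exact JSON that AWS stores may differ in detail, and the account id and region in the ARNs are placeholders.

```python
def invoke_statement(statement_id, function_arn, rule_arn):
    """Approximate the policy statement that lets one rule invoke one function."""
    return {
        "Sid": statement_id,
        "Effect": "Allow",
        "Principal": {"Service": "events.amazonaws.com"},
        "Action": "lambda:InvokeFunction",
        "Resource": function_arn,
        "Condition": {"ArnLike": {"AWS:SourceArn": rule_arn}},
    }


# Placeholder account id and region, for illustration only.
statement = invoke_statement(
    "AllowExecutionFromCloudWatch",
    "arn:aws:lambda:ap-south-1:123456789012:function:snap-and-delete-volume",
    "arn:aws:events:ap-south-1:123456789012:rule/ec2-ghost-stop",
)
print(statement["Condition"])
```

Both blocks can share the statement id AllowExecutionFromCloudWatch because each statement lives in the policy of a different function.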

Creating the variables.tf file

variables.tf

variable "aws-required-region" {
  description = "The region where the aws resources are created"
  default     = "ap-south-1"
}

variable "instance-id" {
  description = "Id of the instance which runs ghost"
}

variable "domain" {
  description = "Domain of the ghost instance"
}

variable "a-record-policy-path" {
  description = "The path containing the policy document for create-a-record-role"
  default     = "./policies/create-a-record.json"
}

variable "volume-start-instance-policy-path" {
  description = "The path containing the policy document for create-volume-start-instance-role"
  default     = "./policies/create-volume-start-instance.json"
}

variable "snap-and-delete-volume-policy-path" {
  description = "The path containing the policy document for snap-and-delete-volume-role"
  default     = "./policies/snap-and-delete-volume.json"
}

variable "create-a-record-lambda-file" {
  description = "Lambda function file for create-a-record"
  default     = "./python/create-a-record.py.tpl"
}

variable "create-volume-instance-lambda-file" {
  description = "Lambda function file for create volume and start the instance"
  default     = "./python/create-volume-start-instance.py.tpl"
}

variable "snap-and-delete-volume-lambda-file" {
  description = "Lambda function file for snap and delete volume"
  default     = "./python/snap-and-delete-volume.py.tpl"
}

variable "root-device-name" {
  description = "Root device name of the ghost instance"
  default     = "/dev/sda1"
}

variable "ec2-ghost-start-rule-path" {
  default = "./eventbridge-rules/ec2-ghost-start.json.tpl"
}

variable "ec2-ghost-stop-rule-path" {
  default = "./eventbridge-rules/ec2-ghost-stop.json.tpl"
}

variable "netlify_access_token" {
  description = "The access token of netlify to change the dns records of the domain"
}

Creating Terraform.tfvars file

- This file contains the values of the variables that the Terraform resources need

- All the variables declared in variables.tf can be overridden here

- The variables without a default (instance-id, domain and netlify_access_token) must be set; a typical file looks as follows

aws-required-region = "ap-south-1"

a-record-policy-path = "./policies/create-a-record.json"

volume-start-instance-policy-path = "./policies/create-volume-start-instance.json"

snap-and-delete-volume-policy-path = "./policies/snap-and-delete-volume.json"

instance-id = "instance-id"

domain = "your-domain"

netlify_access_token = "netlify-access-token"
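How the defaults in variables.tf and the values in terraform.tfvars combine can be pictured as a dictionary overlay: a value from the tfvars file wins over the default, and a variable with neither is still missing (Terraform then asks for it or fails, depending on how it is run). The sketch below models only that idea with a subset of the variables; it is not Terraform's implementation, and the resolve helper is hypothetical.

```python
# Defaults taken from variables.tf; None marks a variable without a default.
DEFAULTS = {
    "aws-required-region": "ap-south-1",
    "root-device-name": "/dev/sda1",
    "instance-id": None,
    "domain": None,
    "netlify_access_token": None,
}


def resolve(defaults, tfvars):
    """Overlay tfvars on the defaults and report variables still missing a value."""
    values = {**defaults, **tfvars}
    missing = sorted(name for name, value in values.items() if value is None)
    return values, missing


values, missing = resolve(
    DEFAULTS,
    {"instance-id": "instance-id", "domain": "your-domain"},
)
print(missing)
```

In this example the Netlify token was left out of the tfvars values, so it is the only variable reported as missing.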

Complete Source Code

- For the complete source code refer to my repository here

Deploying the infrastructure with Terraform

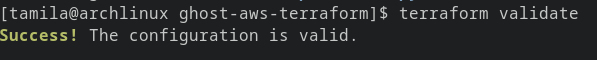

- Before deploying the infrastructure, initialize the working directory once with terraform init so that the providers are downloaded, then check whether the configuration is valid using this command

terraform validate

- If there are no errors, it will display the following message

Terraform validate command

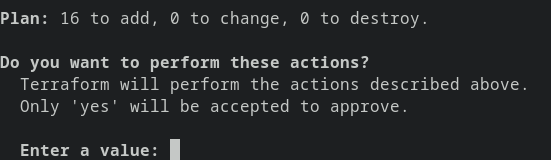

- To deploy the infrastructure with a single command

terraform apply

- The command will display the list of changes that will be made and prompt like this :

Terraform apply command

- Type yes and press Enter

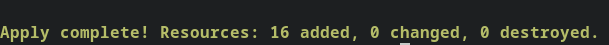

- Once all the resources are deployed, it will display the following message

Terraform apply approved

- Once deployed, we can check the respective sections in the AWS console to verify that everything works as expected.

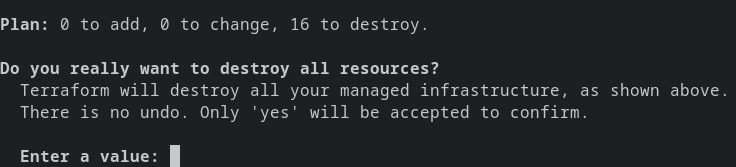

- To destroy the resources created using this Terraform configuration

terraform destroy

- The above command will provide a prompt like this

Terraform destroy command

- Once you have verified the resources that will be deleted, type yes and press Enter

- Once successful, it will display a message like this

Terraform destroy command approved
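For repeated deployments the commands can be collected in a small script. The sketch below only builds the command lists and leaves running them to the caller; terraform init is included because a working directory has to be initialized before validate or apply can run. The helper names are hypothetical, and -auto-approve is an optional Terraform flag that skips the interactive yes prompt.

```python
import subprocess


def terraform_commands(auto_approve=False):
    """Return the init, validate and apply commands in the order they run."""
    apply = ["terraform", "apply"]
    if auto_approve:
        apply.append("-auto-approve")  # skips the interactive yes prompt
    return [["terraform", "init"], ["terraform", "validate"], apply]


def run_all(commands):
    """Run each command in turn and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


commands = terraform_commands()
print(commands)
```

Calling run_all(commands) from the project directory would execute the three steps; it is not invoked here so that the sketch has no side effects.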